Getting Started with Spring AI

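Spring AI greatly simplifies prompt engineering for AI models. A prompt is divided into three roles: user, system, and assistant. The framework also provides Advisor (interceptors), Tool (tool calling), RAG (retrieval-augmented generation), MCP (Model Context Protocol), and more.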

Project setup:

Official documentation: Getting Started :: Spring AI Chinese Documentation

Note: Java 17 or later is required; Java 21 is used here.

```xml
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>1.0.0-SNAPSHOT</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>
```

```xml
<!-- DeepSeek model -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-deepseek</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-advisors-vector-store</artifactId>
    <version>1.0.0</version>
</dependency>
<dependency>
    <groupId>com.esotericsoftware</groupId>
    <artifactId>kryo</artifactId>
    <version>5.6.2</version>
</dependency>
```

```yaml
spring:
  application:
    name: MyAI
  ai:
    deepseek:
      api-key: xxxxxxxxxxxxxxxxxxxxx
      base-url: https:
      chat:
        options:
          model: deepseek-chat
```

Because DeepSeek does not support retrieval augmentation, I switched to the DashScope model provided by Spring AI Alibaba.

The Spring AI Alibaba configuration is as follows:

```xml
<repositories>
    <repository>
        <id>spring-milestones</id>
        <name>Spring Milestones</name>
        <url>https:
        <snapshots>
            <enabled>false</enabled>
        </snapshots>
    </repository>
    <repository>
        <id>aliyunmaven</id>
        <name>aliyun</name>
        <url>https:
    </repository>
</repositories>
```

```xml
<dependency>
    <groupId>com.alibaba.cloud.ai</groupId>
    <artifactId>spring-ai-alibaba-starter</artifactId>
    <version>1.0.0-M6.1</version>
</dependency>
<dependency>
    <groupId>com.esotericsoftware</groupId>
    <artifactId>kryo</artifactId>
    <version>5.6.2</version>
</dependency>
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-markdown-document-reader</artifactId>
    <version>1.0.0-M6</version>
</dependency>
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-mcp-client-spring-boot-starter</artifactId>
    <version>1.0.0-M6</version>
</dependency>
```

```yaml
spring:
  application:
    name: AIFaster
  ai:
    dashscope:
      api-key: xxxxxxxxxxxxxxxxxxxx
      chat:
        options:
          model: deepseek-v3
    mcp:
      client:
        enabled: true
        name: mcp-client
        version: 1.0.0
        type: SYNC
        request-timeout: 60000
        stdio:
          servers-configuration: classpath:/mcp-servers.json
```

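ChatModel and ChatClient

ChatModel and ChatClient in Spring AI

In the Spring AI framework, ChatModel and ChatClient are the two main interfaces for chatting with an AI model. They have different responsibilities and use cases.

ChatModel

ChatModel is the core Spring AI interface for interacting with an AI model. Its main characteristics:

- Role:
  - Talks directly to the underlying AI model (OpenAI, Anthropic, and so on)
  - Supports full chat functionality, including multi-turn conversations and system prompts
- Main methods:
  - call(Prompt): generates a chat response for the given prompt
  - stream(Prompt): the streaming counterpart of call(Prompt)
- Use cases:
  - When you need fine-grained control over the interaction with the model
  - When you need access to advanced features of the underlying model
  - When you want to work with Prompt and ChatResponse objects directly

ChatClient

ChatClient is a higher-level abstraction. Its main characteristics:

- Role:
  - Offers a simpler API for chat interactions
  - Hides part of the underlying complexity, which suits simple scenarios
- Main methods:
  - prompt().user(message).call().content(): sends a message and returns the reply, usually as a plain string
  - prompt().user(message).stream(): the streaming variant
- Use cases:
  - Implementing simple chat features quickly
  - When no complex configuration or advanced features are needed
  - When you want to keep the code short

-- DeepSeek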

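Put simply, ChatModel is the component that sends the request directly to the AI API, while ChatClient lets you attach convenient operations before and after that call.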
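The relationship can be illustrated without Spring at all. The following is a minimal plain-Java sketch, not Spring AI's implementation: `ModelSketch` and `ClientSketch` are hypothetical names, the "model" is just a function from prompt text to reply text, and the "client" adds one step before the call (prepending a default system prompt) and one step after it (trimming the reply).

```java
import java.util.function.Function;

/** Hypothetical stand-in for ChatModel: takes the final prompt text, returns the raw reply. */
interface ModelSketch extends Function<String, String> {}

/** Hypothetical stand-in for ChatClient: wraps the raw model call with extra steps. */
class ClientSketch {
    private final ModelSketch model;
    private final String defaultSystem;

    ClientSketch(ModelSketch model, String defaultSystem) {
        this.model = model;
        this.defaultSystem = defaultSystem;
    }

    String chat(String userMessage) {
        // Before the call: prepend the default system prompt.
        String prompt = "[system] " + defaultSystem + "\n[user] " + userMessage;
        // The call itself is all the model is responsible for.
        String raw = model.apply(prompt);
        // After the call: post-process the reply (here, trim surrounding whitespace).
        return raw.strip();
    }
}
```

Calling `new ClientSketch(p -> " got:" + p + " ", "be brief").chat("hi")` shows the wrapper at work: the fake model sees the system text it was never given directly, and the caller receives a trimmed reply.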

Sending a request directly with ChatModel:

```java
@GetMapping("/simple/chat")
public String simpleChat() {
    return dashScopeChatModel.call(new Prompt(DEFAULT_PROMPT)).getResult().getOutput().getText();
}
```

Sending a request through a configured ChatClient:

```java
private final ChatClient chatClient;
private final ChatModel dashScopeChatModel;

public DashScopeChatModelImpl(ChatModel chatModel) {
    this.dashScopeChatModel = chatModel;
    this.chatClient = ChatClient.builder(this.dashScopeChatModel)
            .defaultSystem(new SimplePrompt().getDefaultPrompt())
            .defaultAdvisors(
            )
            .build();
}

@Override
public String generate(String message) {
    SimpleResponse simpleResponse = chatClient.prompt()
            // Conversation id and number of history messages to load
            .advisors(advisor -> advisor
                    .param(CHAT_MEMORY_CONVERSATION_ID_KEY, "002")
                    .param(CHAT_MEMORY_RETRIEVE_SIZE_KEY, 100))
            // RAG: look up related documents in the vector store
            .advisors(new QuestionAnswerAdvisor(vectorStore))
            // Tools exposed through MCP
            .tools(toolCallbackProvider)
            .user(message)
            .call()
            // Map the reply onto a SimpleResponse object
            .entity(SimpleResponse.class);
    return simpleResponse.toString();
}

@Override
public Flux<ChatResponse> generateStream(String message) {
    Flux<ChatResponse> streamWithMetaData = chatClient.prompt()
            .advisors(advisorSpec -> advisorSpec
                    .param(CHAT_MEMORY_CONVERSATION_ID_KEY, "678")
                    .param(CHAT_MEMORY_RETRIEVE_SIZE_KEY, 10))
            .user(message)
            .stream()
            .chatResponse();
    return streamWithMetaData;
}
```

######################

System and User

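The system message describes what you want the model to do, apart from whatever the user sends. For a medical AI, for example, the system message could read "You are a senior doctor...". The user message is simply the content the user sends.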
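To make the two roles concrete, here is a minimal plain-Java sketch of how a system instruction and a user message might be combined into one request. `RoleMessage` and `PromptSketch` are hypothetical names used only for illustration; they are not Spring AI types.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a chat message: a role plus its text. */
record RoleMessage(String role, String text) {}

class PromptSketch {
    /** Builds the message list sent to the model: system instruction first, then the user input. */
    static List<RoleMessage> build(String systemText, String userText) {
        List<RoleMessage> messages = new ArrayList<>();
        messages.add(new RoleMessage("system", systemText));
        messages.add(new RoleMessage("user", userText));
        return messages;
    }

    public static void main(String[] args) {
        // Print each message as "role: text".
        for (RoleMessage m : build("You are a senior doctor.", "I have had a headache for two days.")) {
            System.out.println(m.role() + ": " + m.text());
        }
    }
}
```

The system text stays the same for every request, while the user text changes per call, which is why the ChatClient builder takes the system prompt once as a default.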

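Multi-turn conversation with context (ChatMemory)

Chat Memory :: Spring AI Chinese Documentation

For in-memory storage you can use InMemoryChatMemoryRepository.

Here Kryo is used to save and load the conversation context.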

```java
// Conversations are stored as files under <working dir>/chat-memery
private final String fileDir = System.getProperty("user.dir") + "/chat-memery";
private final ChatMemory chatMemory = new FileBasedChatMemory(fileDir);

public DashScopeChatModelImpl(ChatModel chatModel) {
    this.dashScopeChatModel = chatModel;
    this.chatClient = ChatClient.builder(this.dashScopeChatModel)
            .defaultSystem(new SimplePrompt().getDefaultPrompt())
            .defaultAdvisors(
                    // Loads history before each request and stores the new messages afterwards
                    new MessageChatMemoryAdvisor(chatMemory)
            )
            .build();
}
```

FileBasedChatMemory is implemented by following the in-memory implementation in InMemoryChatMemoryRepository.
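As a rough picture of what such an in-memory store does, here is a plain-Java sketch that keeps messages per conversation id and returns only the last N on request. It is not the Spring AI class: `InMemoryMemorySketch` is a hypothetical name, and messages are plain strings to keep the example self-contained.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical in-memory chat memory: one message list per conversation id. */
class InMemoryMemorySketch {
    private final Map<String, List<String>> store = new ConcurrentHashMap<>();

    /** Appends a message to the conversation, creating the conversation on first use. */
    void add(String conversationId, String message) {
        // Note: the inner ArrayList is not thread-safe; good enough for a sketch.
        store.computeIfAbsent(conversationId, id -> new ArrayList<>()).add(message);
    }

    /** Returns at most the last lastN messages of the conversation, oldest first. */
    List<String> get(String conversationId, int lastN) {
        List<String> all = store.getOrDefault(conversationId, List.of());
        int from = Math.max(0, all.size() - lastN);
        return List.copyOf(all.subList(from, all.size()));
    }

    /** Forgets the whole conversation. */
    void clear(String conversationId) {
        store.remove(conversationId);
    }
}
```

A file-based variant keeps the same three operations and only swaps the map for a load-and-save step per conversation file.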

Streaming responses with Flux

Reference: Flux and Mono, Reactor in Practice - 技术自由圈 - 博客园

```java
public Flux<ChatResponse> generateStream(String message) {
    Flux<ChatResponse> streamWithMetaData = chatClient.prompt()
            .advisors(advisorSpec -> advisorSpec.param(ChatMemory.CONVERSATION_ID, "678"))
            .user(message)
            .stream()
            .chatResponse()
            // Runs for each chunk, but only once something subscribes to the Flux
            .doOnNext(chatResponse -> {
                AssistantMessage assistantMessage = chatResponse.getResult().getOutput();
                String text = assistantMessage.getText();
                log.info(text);
            });
    // Logged while the Flux is being assembled, before any chunk has arrived
    log.info("Execution finished");
    return streamWithMetaData;
}
```

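Use doOnNext to read each chunk as it arrives.

Three concepts are involved here: publisher, subscriber, and subscription. It feels somewhat like a message queue: a publisher only does anything once a subscriber (consumer) subscribes to it.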
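The "nothing happens until someone subscribes" behaviour can be reproduced with the JDK's own `java.util.concurrent.Flow` interfaces, which define the same publisher, subscriber, and subscription roles. This is a simplified, synchronous sketch under that assumption, not how Reactor is implemented; `ColdPublisherSketch` and `CollectingSubscriber` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;
import java.util.concurrent.atomic.AtomicInteger;

/** Cold publisher sketch: items are produced only once a subscriber requests them. */
class ColdPublisherSketch implements Flow.Publisher<String> {
    private final List<String> items;
    /** Counts how many items have actually been emitted so far. */
    final AtomicInteger emitted = new AtomicInteger();

    ColdPublisherSketch(List<String> items) {
        this.items = items;
    }

    @Override
    public void subscribe(Flow.Subscriber<? super String> subscriber) {
        // The subscription is the link through which the subscriber asks for data.
        subscriber.onSubscribe(new Flow.Subscription() {
            private int next = 0;
            private boolean done = false;

            @Override
            public void request(long n) {
                // Emit up to n items synchronously, then signal completion.
                for (long i = 0; i < n && next < items.size() && !done; i++) {
                    emitted.incrementAndGet();
                    subscriber.onNext(items.get(next++));
                }
                if (next == items.size() && !done) {
                    done = true;
                    subscriber.onComplete();
                }
            }

            @Override
            public void cancel() {
                done = true;
            }
        });
    }
}

/** Subscriber sketch that asks for everything and collects what it receives. */
class CollectingSubscriber implements Flow.Subscriber<String> {
    final List<String> received = new ArrayList<>();
    boolean completed = false;

    @Override public void onSubscribe(Flow.Subscription s) { s.request(Long.MAX_VALUE); }
    @Override public void onNext(String item) { received.add(item); }
    @Override public void onError(Throwable t) { throw new RuntimeException(t); }
    @Override public void onComplete() { completed = true; }
}
```

Creating a `ColdPublisherSketch` emits nothing; its `emitted` counter only moves after `subscribe` is called with a subscriber that requests items. The same reasoning explains why a `doOnNext` callback on a Flux stays silent until the returned Flux is subscribed to.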

```java
import com.esotericsoftware.kryo.Kryo;
import com.esotericsoftware.kryo.io.Input;
import com.esotericsoftware.kryo.io.Output;
import org.objenesis.strategy.StdInstantiatorStrategy;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.chat.messages.Message;

import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

public class FileBasedChatMemory implements ChatMemory {

    private final String BASE_DIR;

    // Shared Kryo instance; Kryo is not thread-safe, so this suits single-threaded use only
    private static final Kryo kryo = new Kryo();

    static {
        // Allow serializing classes that were not registered in advance
        kryo.setRegistrationRequired(false);
        // Allow instantiating classes without a no-arg constructor
        kryo.setInstantiatorStrategy(new StdInstantiatorStrategy());
    }

    public FileBasedChatMemory(String dir) {
        this.BASE_DIR = dir;
        File baseDir = new File(dir);
        if (!baseDir.exists()) {
            baseDir.mkdirs();
        }
    }

    @Override
    public void add(String conversationId, Message message) {
        ChatMemory.super.add(conversationId, message);
    }

    @Override
    public void add(String conversationId, List<Message> messages) {
        List<Message> conversationMessages = getOrCreateConversation(conversationId);
        conversationMessages.addAll(messages);
        saveConversation(conversationId, conversationMessages);
    }

    @Override
    public List<Message> get(String conversationId, int lastN) {
        List<Message> allMessages = getOrCreateConversation(conversationId);
        // Keep only the last lastN messages
        return allMessages.stream()
                .skip(Math.max(0, allMessages.size() - lastN))
                .toList();
    }

    @Override
    public void clear(String conversationId) {
        File file = getConversationFile(conversationId);
        if (file.exists()) {
            file.delete();
        }
    }

    // Reads the conversation from disk, or returns an empty list if no file exists yet
    private List<Message> getOrCreateConversation(String conversationId) {
        File file = getConversationFile(conversationId);
        List<Message> messages = new ArrayList<>();
        if (file.exists()) {
            try (Input input = new Input(new FileInputStream(file))) {
                messages = kryo.readObject(input, ArrayList.class);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        return messages;
    }

    // Overwrites the conversation file with the full message list
    private void saveConversation(String conversationId, List<Message> messages) {
        File file = getConversationFile(conversationId);
        try (Output output = new Output(new FileOutputStream(file))) {
            kryo.writeObject(output, messages);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // One file per conversation: <BASE_DIR>/<conversationId>.kryo
    private File getConversationFile(String conversationId) {
        return new File(BASE_DIR, conversationId + ".kryo");
    }
}
```

Finally, when sending a request through ChatClient, pass the conversation id and the number of history messages to retrieve:

```java
chatClient.prompt().advisors(advisor -> advisor
        .param(CHAT_MEMORY_CONVERSATION_ID_KEY, "002")
        .param(CHAT_MEMORY_RETRIEVE_SIZE_KEY, 100))
```

Advisor (interceptor)

Advisor API :: Spring AI Chinese Documentation

Advisors run operations before and after a request is sent to the model.
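The before/after behaviour follows the familiar chain-of-responsibility shape. The sketch below shows that shape in plain Java under simplified assumptions: requests and responses are strings, and `AdvisorSketch`, `TagAdvisor`, and `AdvisorChainSketch` are hypothetical names rather than Spring AI types.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.UnaryOperator;

/** Hypothetical advisor: may change the request, call the rest of the chain, then change the response. */
interface AdvisorSketch {
    int order();
    String around(String request, UnaryOperator<String> next);
}

/** Example advisor that marks the request on the way in and the response on the way out. */
record TagAdvisor(String tag, int order) implements AdvisorSketch {
    @Override
    public String around(String request, UnaryOperator<String> next) {
        String response = next.apply(request + "<" + tag); // before the call
        return response + tag + ">";                         // after the call
    }
}

class AdvisorChainSketch {
    /** Runs the advisors in ascending order around the final model call. */
    static String call(List<AdvisorSketch> advisors, UnaryOperator<String> model, String request) {
        List<AdvisorSketch> sorted = new ArrayList<>(advisors);
        sorted.sort(Comparator.comparingInt(AdvisorSketch::order));
        UnaryOperator<String> chain = model;
        // Wrap from the last advisor to the first so the lowest order ends up outermost.
        for (int i = sorted.size() - 1; i >= 0; i--) {
            AdvisorSketch advisor = sorted.get(i);
            UnaryOperator<String> next = chain;
            chain = req -> advisor.around(req, next);
        }
        return chain.apply(request);
    }
}
```

With advisors `a` (order 1) and `b` (order 2) around a model that wraps its input in brackets, the request passes through `a` then `b` on the way in, and the response passes through `b` then `a` on the way out.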

Logging advisor (logs the prompt that is sent and the response that comes back):

```java
this.chatClient = ChatClient.builder(this.dashScopeChatModel)
        .defaultSystem(new SimplePrompt().getDefaultPrompt())
        .defaultAdvisors(
                new MyLoggerAdvisor()
        )
        .build();
```

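Implementation:

```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.advisor.api.AdvisedRequest;
import org.springframework.ai.chat.client.advisor.api.AdvisedResponse;
import org.springframework.ai.chat.client.advisor.api.CallAroundAdvisor;
import org.springframework.ai.chat.client.advisor.api.CallAroundAdvisorChain;
import org.springframework.ai.chat.client.advisor.api.StreamAroundAdvisor;
import org.springframework.ai.chat.client.advisor.api.StreamAroundAdvisorChain;
import org.springframework.ai.chat.model.MessageAggregator;
import reactor.core.publisher.Flux;

@Slf4j
public class MyLoggerAdvisor implements CallAroundAdvisor, StreamAroundAdvisor {

    // Blocking call: log, pass the request down the chain, log the response
    @Override
    public AdvisedResponse aroundCall(AdvisedRequest advisedRequest, CallAroundAdvisorChain chain) {
        advisedRequest = this.before(advisedRequest);
        AdvisedResponse advisedResponse = chain.nextAroundCall(advisedRequest);
        this.observeAfter(advisedResponse);
        return advisedResponse;
    }

    // Streaming call: aggregate the chunks so the full response is logged once
    @Override
    public Flux<AdvisedResponse> aroundStream(AdvisedRequest advisedRequest, StreamAroundAdvisorChain chain) {
        advisedRequest = this.before(advisedRequest);
        Flux<AdvisedResponse> advisedResponses = chain.nextAroundStream(advisedRequest);
        return (new MessageAggregator()).aggregateAdvisedResponse(advisedResponses, this::observeAfter);
    }

    @Override
    public String getName() {
        return this.getClass().getSimpleName();
    }

    // Position of this advisor in the chain
    @Override
    public int getOrder() {
        return 0;
    }

    private AdvisedRequest before(AdvisedRequest request) {
        log.info("AI Request: {}", request.adviseContext());
        return request;
    }

    private void observeAfter(AdvisedResponse advisedResponse) {
        log.info("AI Response: {}", advisedResponse.response().getResult().getOutput().getText());
    }
}
```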

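RAG (retrieval-augmented generation)

Retrieval Augmented Generation :: Spring AI Chinese Documentation

The knowledge base is stored as vectors so that the content relevant to a question can be matched quickly and sent to the AI together with the question. (Matching relies on vector math: the closer two vectors are in the space, the better the match.)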
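One common way to measure "closeness" is cosine similarity. The sketch below ranks stored entries against a query vector that way; it is an illustration only, with hypothetical names (`VectorSearchSketch`, `topK`) and hand-written two-dimensional vectors, whereas a real setup obtains much longer vectors from an embedding model.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;

class VectorSearchSketch {
    /** Cosine similarity: 1.0 for the same direction, 0.0 for orthogonal vectors. */
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Returns the keys of the k stored vectors most similar to the query, best match first. */
    static List<String> topK(Map<String, double[]> store, double[] query, int k) {
        return store.entrySet().stream()
                // Negate the similarity so the highest score sorts first.
                .sorted(Comparator.comparingDouble(
                        (Map.Entry<String, double[]> e) -> -cosine(e.getValue(), query)))
                .limit(k)
                .map(Map.Entry::getKey)
                .toList();
    }
}
```

The retrieved entries are then added to the prompt as context, which is the "augmented" part of retrieval-augmented generation.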

Markdown files are used as the knowledge base here.

Add the Spring AI Markdown reader dependency:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-markdown-document-reader</artifactId>
    <version>1.0.0-M6</version>
</dependency>
```

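Configure the vector store:

```java
import jakarta.annotation.Resource;
import org.springframework.ai.document.Document;
import org.springframework.ai.embedding.EmbeddingModel;
import org.springframework.ai.vectorstore.SimpleVectorStore;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import java.util.List;

@Configuration
public class VectorConfig {

    @Resource
    MyMarkdownReader myMarkdownReader;

    @Bean
    public VectorStore vectorStore(EmbeddingModel embeddingModel) {
        // In-memory vector store; documents are embedded when they are added
        SimpleVectorStore simpleVectorStore = SimpleVectorStore.builder(embeddingModel).build();
        List<Document> markDownDocs = myMarkdownReader.loadMarkdown();
        simpleVectorStore.add(markDownDocs);
        return simpleVectorStore;
    }
}
```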

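Read the local Markdown files:

```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.document.Document;
import org.springframework.ai.reader.markdown.MarkdownDocumentReader;
import org.springframework.ai.reader.markdown.config.MarkdownDocumentReaderConfig;
import org.springframework.core.io.Resource;
import org.springframework.core.io.support.ResourcePatternResolver;
import org.springframework.stereotype.Component;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

@Slf4j
@Component
public class MyMarkdownReader {

    private final ResourcePatternResolver resourcePatternResolver;

    MyMarkdownReader(ResourcePatternResolver resourcePatternResolver) {
        this.resourcePatternResolver = resourcePatternResolver;
    }

    public List<Document> loadMarkdown() {
        List<Document> allDocs = new ArrayList<>();
        try {
            // Every .md file under src/main/resources/doc
            Resource[] resources = resourcePatternResolver.getResources("classpath:doc/*.md");
            for (Resource resource : resources) {
                String fileName = resource.getFilename();
                MarkdownDocumentReaderConfig config = MarkdownDocumentReaderConfig.builder()
                        // Start a new Document at each horizontal rule
                        .withHorizontalRuleCreateDocument(true)
                        .withIncludeCodeBlock(false)
                        .withIncludeBlockquote(false)
                        // Record the source file name as metadata
                        .withAdditionalMetadata("filename", fileName)
                        .build();
                MarkdownDocumentReader reader = new MarkdownDocumentReader(resource, config);
                allDocs.addAll(reader.get());
            }
        } catch (IOException e) {
            log.error("Failed to load Markdown files", e);
            throw new RuntimeException(e);
        }
        return allDocs;
    }
}
```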

To use it, add a QuestionAnswerAdvisor to the advisors:

```java
@Resource
private VectorStore vectorStore;

SimpleResponse simpleResponse = chatClient.prompt()
        .advisors(new QuestionAnswerAdvisor(vectorStore))
        .user(message)
        .call()
        .entity(SimpleResponse.class);
```

MCP

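Model Context Protocol (MCP) :: Spring AI Chinese Documentation

With MCP you can call services that others expose over the MCP protocol. Here MCP is used simply to call the MCP server provided by Amap (高德地图).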
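Under the hood, MCP messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method. The sketch below only builds such a request as a string to show its shape; the MCP client starter does this for you. `McpRequestSketch` is a hypothetical helper, and the tool name and argument used in the usage note are assumptions for illustration, not taken from the Amap server's documentation.

```java
/** Hypothetical helper that builds the JSON-RPC body of an MCP tool call. */
class McpRequestSketch {
    /**
     * Builds a "tools/call" request with a single string argument.
     * No JSON escaping is done, so the inputs must not contain quotes or backslashes.
     */
    static String toolsCall(int id, String toolName, String argName, String argValue) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\",\"params\":{\"name\":\"" + toolName
                + "\",\"arguments\":{\"" + argName + "\":\"" + argValue + "\"}}}";
    }

    public static void main(String[] args) {
        // Tool name and argument are made up for the example.
        System.out.println(toolsCall(1, "maps_weather", "city", "Beijing"));
    }
}
```

With a stdio server, a message like this is written to the server process's standard input, and the result comes back on its standard output.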

First, get an API key from Amap: 高德开放平台 | 高德地图API

Add the dependency:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-mcp-client-spring-boot-starter</artifactId>
    <version>1.0.0-M6</version>
</dependency>
```

Update the YAML configuration:

```yaml
spring:
  application:
    name: AIFaster
  ai:
    dashscope:
      api-key: xxxxxxxxxxxxxxxxxxxxxxxxxxxx
      chat:
        options:
          model: deepseek-v3
    mcp:
      client:
        enabled: true
        name: mcp-client
        version: 1.0.0
        type: SYNC
        request-timeout: 60000
        stdio:
          servers-configuration: classpath:/mcp-servers.json
```

The file referenced by servers-configuration holds the server definition, including the API key. Note that npx.cmd is the Windows launcher; on macOS or Linux the command is npx.

```json
{
  "mcpServers": {
    "amap-maps": {
      "command": "npx.cmd",
      "args": [
        "-y",
        "@amap/amap-maps-mcp-server"
      ],
      "env": {
        "AMAP_MAPS_API_KEY": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
      }
    }
  }
}
```

Usage:

```java
@Resource
private ToolCallbackProvider toolCallbackProvider;

@Override
public String generateMcp(String message) {
    String res = chatClient.prompt()
            .advisors(advisor -> advisor
                    .param(CHAT_MEMORY_CONVERSATION_ID_KEY, "003")
                    .param(CHAT_MEMORY_RETRIEVE_SIZE_KEY, 100))
            // Tools discovered from the configured MCP servers
            .tools(toolCallbackProvider)
            .user(message)
            .call()
            .content();
    return res;
}
```

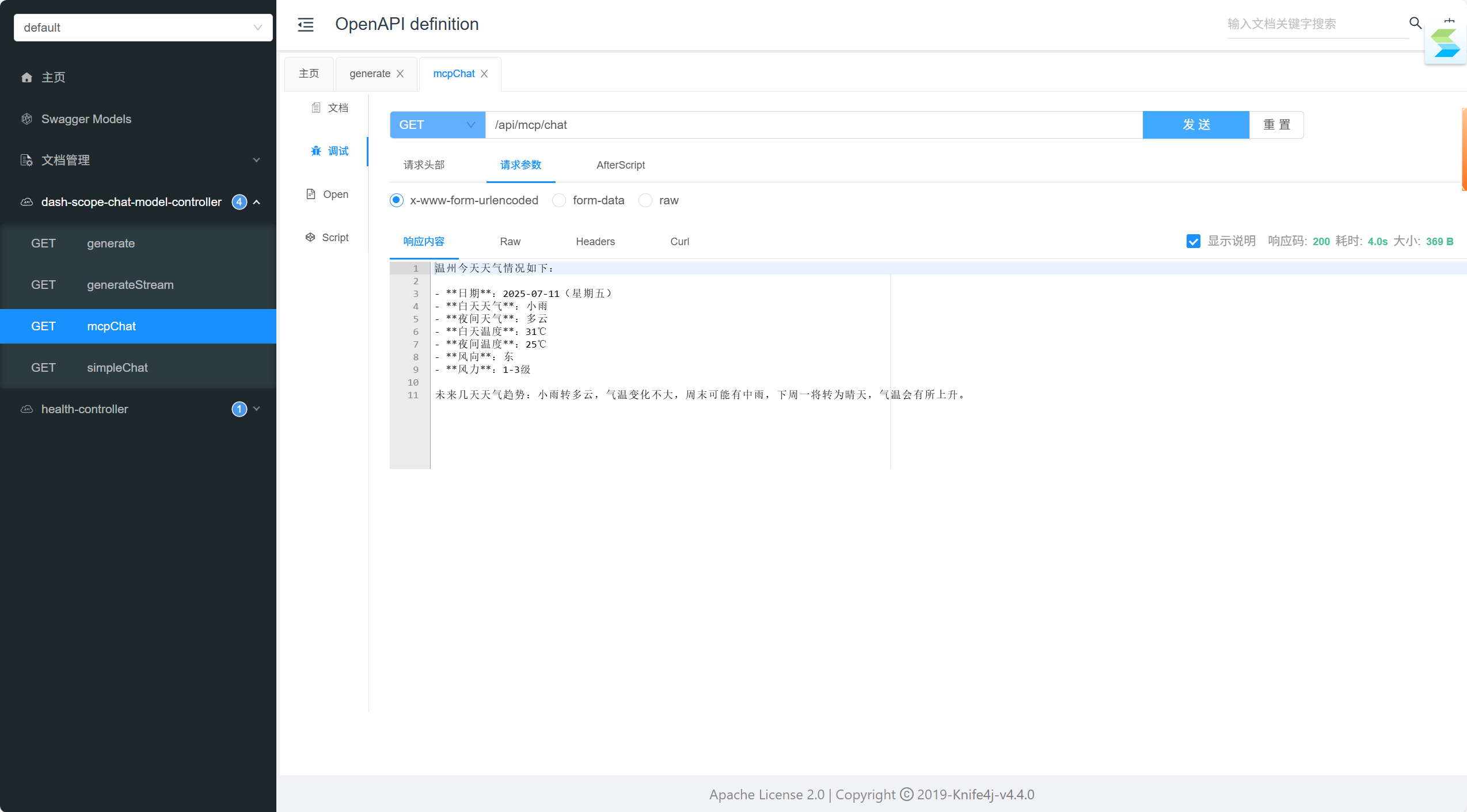

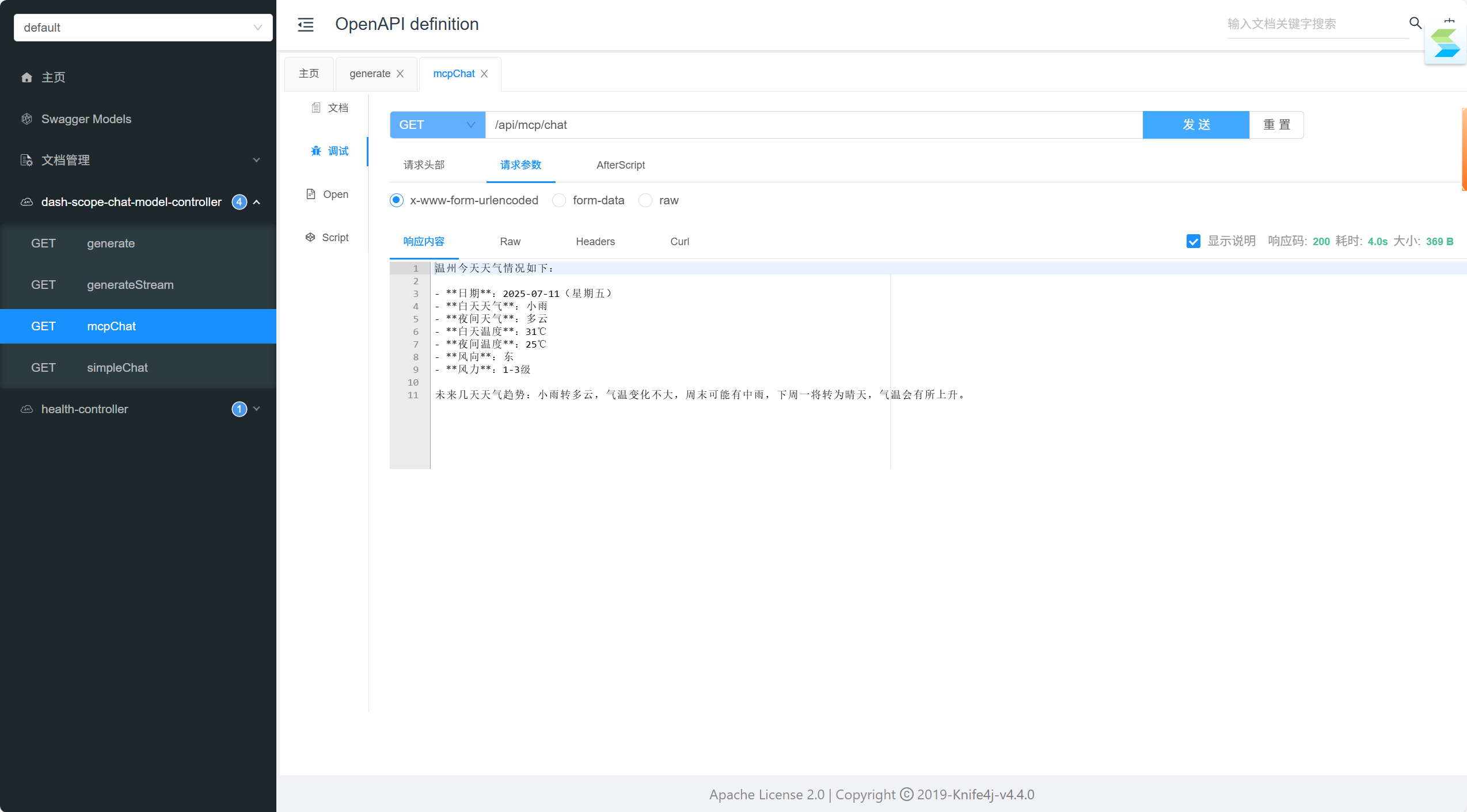

效果:

Tool Calling :: Spring AI Reference

Besides the tools provided through MCP, you can also write your own tools.
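Conceptually, a tool is a named function that the model can ask the application to run. The plain-Java sketch below shows only that dispatch idea; `ToolRegistrySketch` is a hypothetical name, tools take and return plain strings, and Spring AI's actual tool API is described on the Tool Calling page referenced above.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical registry: maps a tool name to the function that implements it. */
class ToolRegistrySketch {
    private final Map<String, Function<String, String>> tools = new HashMap<>();

    /** Makes a tool available under the given name. */
    void register(String name, Function<String, String> tool) {
        tools.put(name, tool);
    }

    /** Runs the named tool with one argument, as the application would when the model requests it. */
    String invoke(String name, String argument) {
        Function<String, String> tool = tools.get(name);
        if (tool == null) {
            return "unknown tool: " + name;
        }
        return tool.apply(argument);
    }
}
```

In a real exchange, the model's reply names the tool and supplies the arguments, the application runs the tool, and the result is sent back to the model so it can finish its answer.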

//TODO